Underrated Tips On How To Reduce Multicollinearity

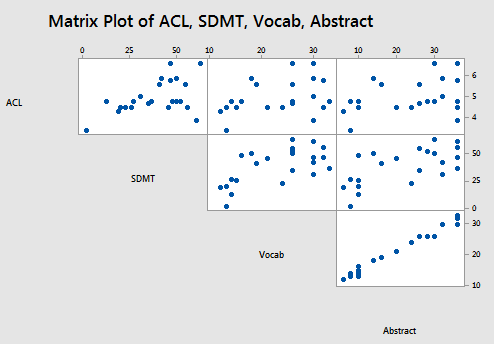

There are lots of questions and answers about how to implement PCA.

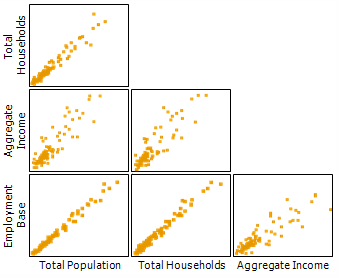

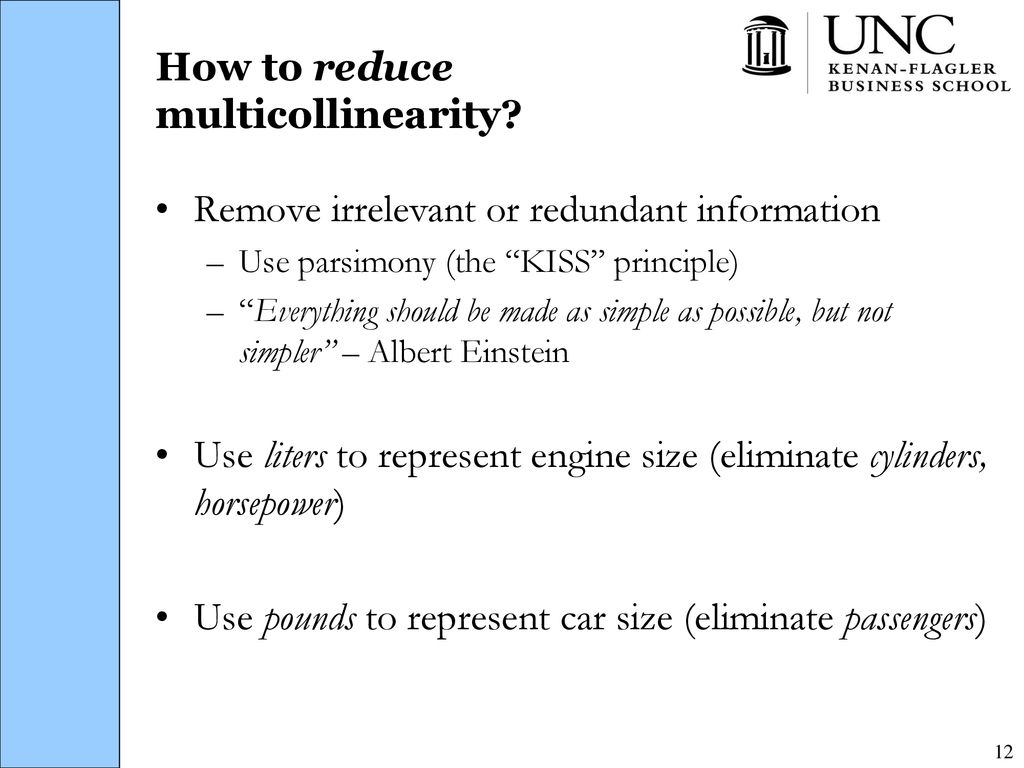

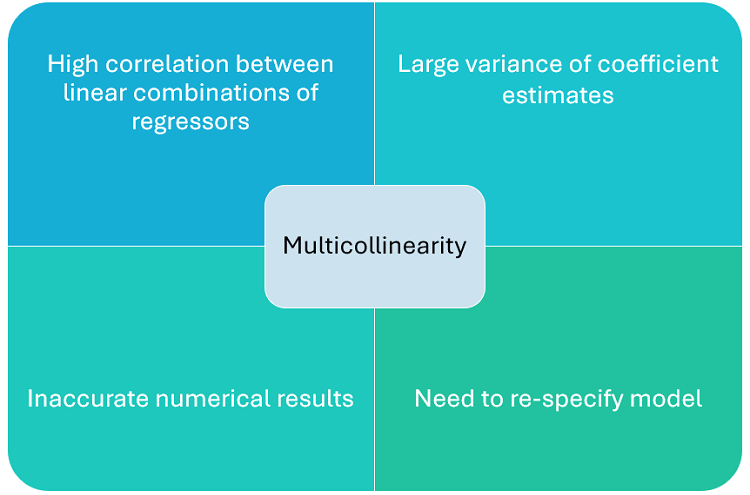

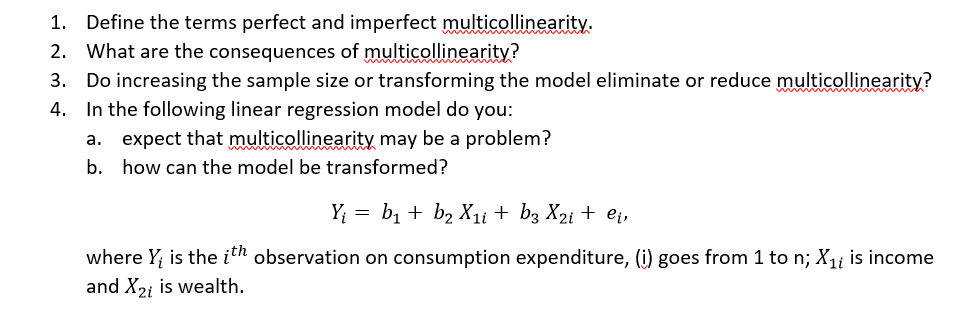

There are several ways to reduce multicollinearity. Removing features is not recommended as a first step. The impact of multicollinearity can be reduced by increasing the sample size of your study. If that is not possible, a straightforward correction is to remove one or more of the variables that show a high correlation.
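As a rough illustration of removing a highly correlated variable, the sketch below computes a variance inflation factor (VIF) for each predictor before and after dropping one of a correlated pair. The helper name `vif`, the synthetic data, and the choice of which column to drop are assumptions made for this example, not part of any particular library.

```python
import numpy as np

def vif(X):
    """Variance inflation factor for each column of X (n_samples, n_features)."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    out = np.empty(p)
    for j in range(p):
        y = X[:, j]
        others = np.delete(X, j, axis=1)
        # Regress column j on the remaining columns plus an intercept.
        A = np.column_stack([np.ones(n), others])
        coef, *_ = np.linalg.lstsq(A, y, rcond=None)
        resid = y - A @ coef
        r2 = 1.0 - (resid @ resid) / ((y - y.mean()) ** 2).sum()
        out[j] = 1.0 / (1.0 - r2)
    return out

# Synthetic data (assumption): x2 is nearly a copy of x1, x3 is independent.
rng = np.random.default_rng(0)
x1 = rng.normal(size=200)
x2 = x1 + rng.normal(scale=0.05, size=200)
x3 = rng.normal(size=200)
X = np.column_stack([x1, x2, x3])

vif_before = vif(X)
X_reduced = np.delete(X, 1, axis=1)  # drop x2, one of the correlated pair
vif_after = vif(X_reduced)

print("VIF before:", vif_before)
print("VIF after: ", vif_after)
```

A common rule of thumb treats a VIF above roughly 5 to 10 as a sign of problematic collinearity, though the threshold is a judgment call.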

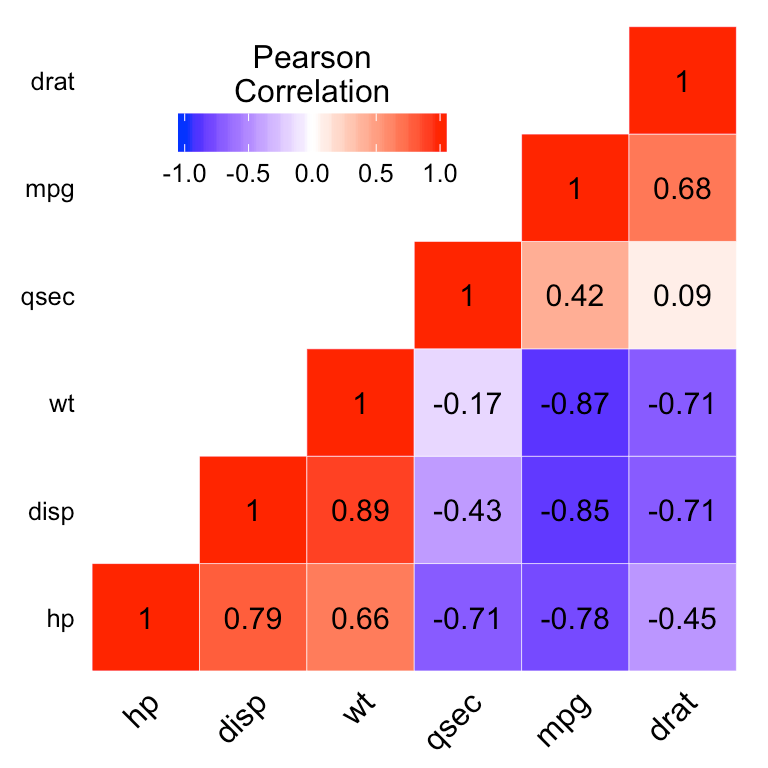

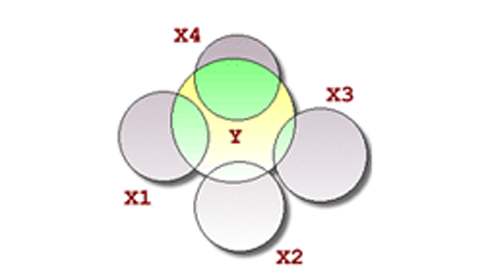

To reduce the amount of multicollinearity in a model, one can remove the specific variables that are identified as the most collinear. Multicollinearity only affects the predictor variables that are correlated with one another. We can also use ridge or lasso regression, because these techniques add a penalty term, weighted by a lambda value, that penalizes some of the coefficients.

To reduce the effect of multicollinearity we can use regularization, which means keeping all the features but reducing the magnitude of the model's coefficients, in particular those of the features that are collinear. Whether multicollinearity needs to be reduced at all depends on its severity.
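The shrinkage effect of regularization can be sketched with closed-form ridge regression. The function `ridge_fit`, the synthetic data, and the penalty value `lam=10.0` are assumptions chosen for illustration. Lasso has no closed form and needs an iterative solver, so it is not shown here.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form ridge coefficients on centered data (intercept not penalized)."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    p = X.shape[1]
    # Solve (X'X + lam * I) beta = X'y; lam = 0 gives ordinary least squares.
    return np.linalg.solve(Xc.T @ Xc + lam * np.eye(p), Xc.T @ yc)

# Synthetic data (assumption): two nearly identical predictors.
rng = np.random.default_rng(0)
x1 = rng.normal(size=200)
x2 = x1 + rng.normal(scale=0.05, size=200)
X = np.column_stack([x1, x2])
y = 3.0 * x1 + rng.normal(scale=0.5, size=200)

beta_ols = ridge_fit(X, y, lam=0.0)
beta_ridge = ridge_fit(X, y, lam=10.0)

print("OLS coefficients:  ", beta_ols)
print("Ridge coefficients:", beta_ridge)
```

Both features stay in the model. The penalty only pulls the coefficient vector toward zero, which stabilizes the estimates for the collinear pair.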

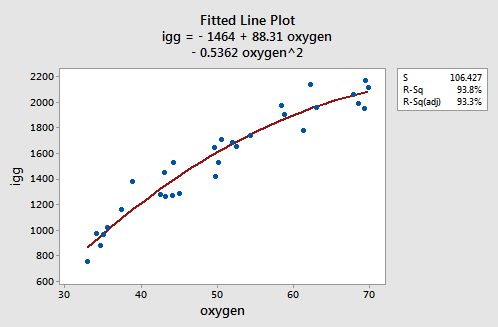

Another idea is to reduce the dimensionality of the data using the PCA algorithm and then drop the components with low variance. To remove multicollinearity, we can do two things. This helps reduce the multicollinearity linking the predictors.
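A minimal sketch of the PCA approach, using NumPy's SVD on standardized data. The synthetic data and the choice to keep `k = 2` components are assumptions for this example. The resulting component scores are uncorrelated with each other, which is why they can replace the collinear predictors in a regression.

```python
import numpy as np

# Synthetic data (assumption): x2 is nearly a copy of x1, x3 is independent.
rng = np.random.default_rng(0)
x1 = rng.normal(size=200)
x2 = x1 + rng.normal(scale=0.05, size=200)
x3 = rng.normal(size=200)
X = np.column_stack([x1, x2, x3])

# Standardize, then take the SVD; rows of Vt are the principal directions.
Z = (X - X.mean(axis=0)) / X.std(axis=0)
U, s, Vt = np.linalg.svd(Z, full_matrices=False)

# Share of total variance carried by each component (sorted descending).
explained = s**2 / np.sum(s**2)

# Keep the first k components and drop the low-variance remainder.
k = 2
scores = Z @ Vt[:k].T
corr = np.corrcoef(scores, rowvar=False)

print("Explained variance ratio:", explained)
print("Correlation between kept components:", corr[0, 1])
```

The trade-off is interpretability: each component is a mixture of the original variables, so coefficients no longer map to a single predictor.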

If there is only moderate multicollinearity, you likely don’t need to resolve it in any way. This method allows you to group similar. However, in many econometric textbooks, you will find that.

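You can reduce multicollinearity using PCA. You can also reduce multicollinearity by centering the variables, which mainly helps when the collinearity comes from polynomial or interaction terms. By increasing the alpha value for the L1 penalty, more coefficients are driven to zero.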
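To illustrate the centering point, the sketch below compares the correlation between a variable and its square before and after subtracting the mean. The data (a normal variable with mean 10) is an assumption chosen so that the effect is easy to see.

```python
import numpy as np

# Synthetic predictor (assumption): values far from zero, so x and x**2 move together.
rng = np.random.default_rng(0)
x = rng.normal(loc=10.0, scale=1.0, size=500)

# Correlation between the raw variable and its squared term.
corr_raw = np.corrcoef(x, x**2)[0, 1]

# Center first, then build the squared term from the centered variable.
xc = x - x.mean()
corr_centered = np.corrcoef(xc, xc**2)[0, 1]

print("corr(x, x^2) raw:     ", corr_raw)
print("corr(x, x^2) centered:", corr_centered)
```

Centering does not help when two distinct predictors are correlated with each other, since subtracting a constant leaves their correlation unchanged.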